Databases Will Inherit the Earth

Databases are at the heart of every application.

Whether you are booking a flight on Expedia, ordering up a ride on Uber or posting your latest vacation photo on Facebook, you are talking to a database somewhere. Databases are the center of everything that is done in the computing world.

For a decade now, we have been in love with NoSQL databases, represented by platforms like MongoDB and Cassandra. NoSQL has become so popular that some in the industry even predicted the demise of the "older" relational database (RDBMS). But even with all the momentum in NoSQL, whenever you visit tech companies like Twitter or Facebook and ask about their data architecture, they all still use an RDBMS for their transactional systems. Why? To understand this better, let’s take a walk down database memory lane.

Databases from the 80s, 90s and early 2000s were largely relational databases that spoke a language called ANSI SQL. Being relational, these databases all had well-defined structures (or "schemas") with extremely reliable and persistent column- and row-based architectures. Their biggest benefit was that their data was always consistent and it was easy for developers to build applications on top of them.

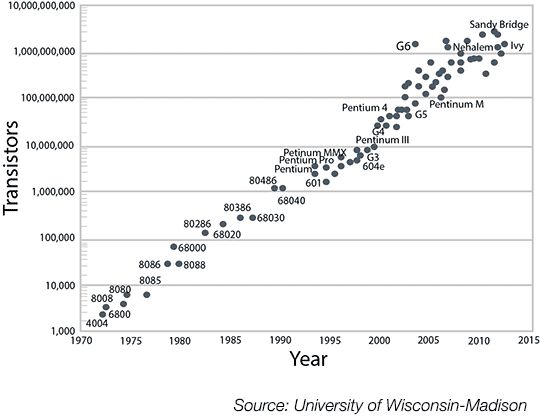

The rise of the relational database corresponded with the rise of companies and projects that are now part of the lingua franca of all developers. (Oracle, MySQL and Postgres are all commonly used variants of SQL databases.) The challenge, however, with these relational databases was that they were designed to run on single nodes in order to maintain consistency and generally needed to "scale up"—in other words, run on a bigger, more expensive and more powerful server—in order to deal with large amounts of data. Growth was therefore dependent on Moore’s Law, which famously predicts that a computer’s processing power will double every two years.

[Moore's law (/mɔərz.ˈlɔː/) is the observation that the number of transistors in a dense integrated circuit doubles approximately every two years]

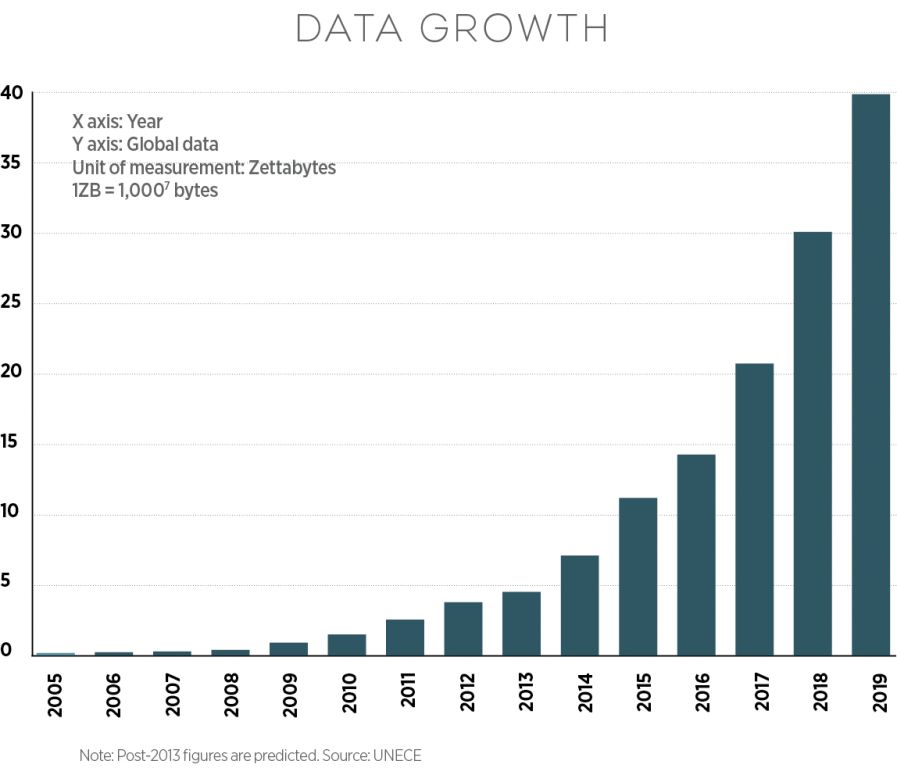

Then, this thing called the Internet came along. With the millions of websites, mobile devices, apps, etc., the amount of data being produced by computing systems began to grow dramatically, so fast that it began to outpace Moore’s law. Here is a chart showing the raw data growth:

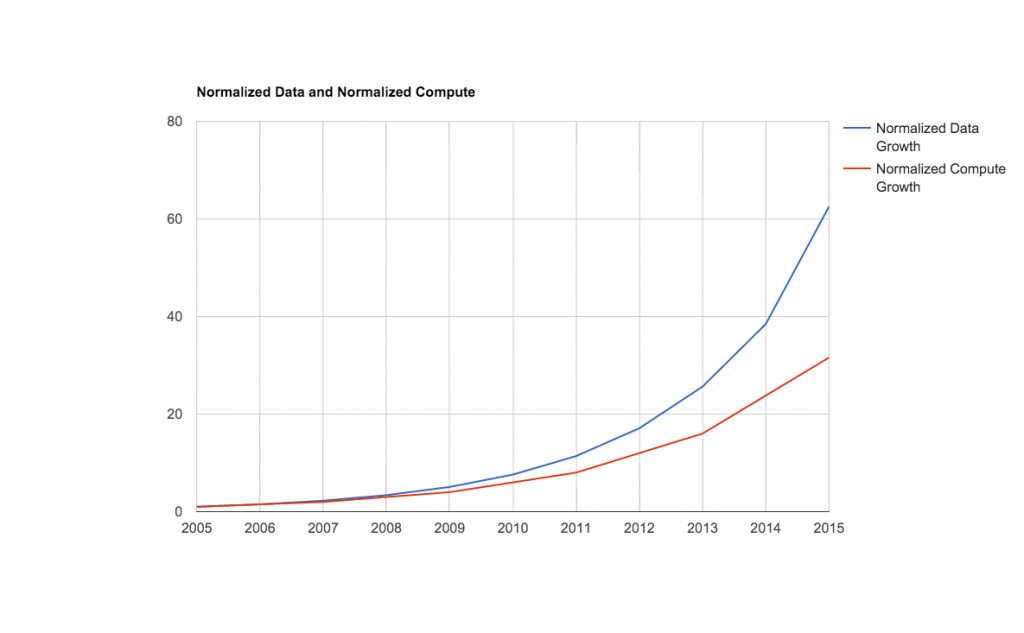

And here is a version which shows how much faster data is growing than Moore's law:

These conditions created situations where traditional relational databases ran out of single-node capacity. To address that, developers and database administrators began a practice called "sharding": splitting a database into multiple smaller databases. The problem with sharding is that each of the smaller databases contains different data, so applications have to be written with some a priori knowledge of where the data is. That's a big problem at scale.
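To make the "a priori knowledge" concrete, here is a minimal sketch of the routing logic a sharded application has to carry around. It assumes hash-based sharding over four shards; the shard names, the `shard_for` helper and the in-memory `stores` are all hypothetical stand-ins for illustration, not any particular product's API.

```python
import hashlib

# Hypothetical shard names; in a real deployment each would be a separate database server.
SHARDS = ["users_db_0", "users_db_1", "users_db_2", "users_db_3"]

# In-memory dictionaries standing in for the separate databases.
stores = {name: {} for name in SHARDS}


def shard_for(user_id: str) -> str:
    """Hash the key so the same user always maps to the same shard."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(SHARDS)
    return SHARDS[index]


def save_user(user_id: str, record: dict) -> None:
    # The application, not the database, decides where the row lives.
    stores[shard_for(user_id)][user_id] = record


def load_user(user_id: str):
    # Reads must repeat the same routing decision, or the row is not found.
    return stores[shard_for(user_id)].get(user_id)


save_user("alice", {"city": "New York"})
print(shard_for("alice"), load_user("alice"))
```

Every query path has to go through something like `shard_for`, and changing the number of shards changes where existing keys map. That is part of why this approach gets painful at scale.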

To address this problem, NoSQL databases became popular in the 2000s. These databases had some very desirable characteristics. First, they wrote data multiple times (typically three) across multiple nodes. That made NoSQL databases inherently more reliable. Second, the replicas could all perform reads and writes, so they promised massive scale and geographic distribution. And, finally, they did not have strict schemas. This meant you could enter documents or forms into the database without first defining a fixed structure for them.

But all these good NoSQL things came with some serious compromises. First, the data was only eventually consistent, so applications might see different data points depending on which node of the database they were talking to (imagine checking your credit card balance and getting two different results depending on which copy of the database you talked to!). Second, the lack of schemas made writing transactional applications a lot harder for developers.
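The credit card example can be simulated in a few lines. This is a toy model, assuming three replicas that apply each other's writes only when an explicit `sync` step runs; the `Replica` class and its methods are hypothetical and do not reflect how any specific NoSQL system replicates data.

```python
class Replica:
    """A toy database node that applies remote writes lazily."""

    def __init__(self, name):
        self.name = name
        self.data = {}
        self.pending = []  # writes received from peers but not yet applied

    def write(self, key, value, peers):
        # Apply locally right away; peers only queue the change for later.
        self.data[key] = value
        for peer in peers:
            peer.pending.append((key, value))

    def read(self, key):
        return self.data.get(key)

    def sync(self):
        # Apply queued writes in arrival order, then clear the queue.
        for key, value in self.pending:
            self.data[key] = value
        self.pending.clear()


a, b, c = Replica("a"), Replica("b"), Replica("c")
for replica in (a, b, c):
    replica.data["balance"] = 100

# A payment is recorded on replica "a" only.
a.write("balance", 40, peers=[b, c])
before = {r.name: r.read("balance") for r in (a, b, c)}

# Once replication catches up, every replica agrees.
for replica in (b, c):
    replica.sync()
after = {r.name: r.read("balance") for r in (a, b, c)}

print(before)  # {'a': 40, 'b': 100, 'c': 100}
print(after)   # {'a': 40, 'b': 40, 'c': 40}
```

Between the write and the sync, the answer depends on which replica you ask. A transactional application cannot tolerate that window.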

In an ideal world, you’d want a database system that was both scalable and strongly consistent. The challenge is that it’s a really hard computer science problem to do both. But, if you could do it, you’d get the best of both worlds.

Google, with all of its smart people, did just that. In 2012, they built a hybrid system called Spanner that combined the usability and transactional guarantees of a relational database with the scalability of a NoSQL system. The only problem? Spanner is not open source, and while Google will release it to the Google Cloud Platform, it won’t be available elsewhere.

Two programmers who were impressed by Spanner were Spencer Kimball and Peter Mattis. Friends since their undergraduate days at Berkeley in the 1990s, the former roommates cut their teeth in open source by developing the hugely popular image editing software GIMP, which just celebrated its 20th birthday. The two also ended up working for Google for nine years, albeit not on Spanner. The centerpiece of their work was Colossus, Google’s distributed file system, which spoiled them in terms of working on great distributed systems. At Google, they also met a brilliant young software developer, Ben Darnell.

After leaving Google, Spencer, Ben and Peter co-founded the photo-sharing app startup Viewfinder, which was eventually acquired by Square. As they assimilated into Square’s infrastructure, they began imagining the possibilities for a new database project.

Enter the Cockroaches

This new database has a quirky name. Cockroaches are popularly depicted as dirty pests, but they are actually quite brilliant in their design. Not only have they been around since before the dinosaurs, but they are able to thrive in a variety of inhospitable habitats around the world. Cockroaches also display collective thinking and are insanely hardy, able to survive a month without food and up to 45 minutes without air. In short, they are survivors, and they are resilient.

Survivability and resilience make cockroaches the perfect mascot for Spencer, Peter and Ben’s new company, Cockroach Labs, and its first product, CockroachDB. This database combines the attributes we love about relational and NoSQL systems into a single, easy-to-use, cloud-friendly product. CockroachDB scales, survives disasters and is always consistent. It’s designed to outlast any disaster (natural or man-made), and if a server (or worse, an entire datacenter) goes dark, CockroachDB provides continuous service and keeps applications functional long before human help is available. It is also open source, allowing like-minded developers and engineers the opportunity to rub antennae and share ideas on how to improve the system.

Not bad for a little critter.

We got to know the founding team a couple of months ago in New York. To be honest, we were initially a bit skeptical that a database project of this ambition would find its origins in New York. But the more we learned about the team and what they had accomplished, the more excited we got. CockroachDB is now entering its beta phase and is ready to be taken for a test drive. We think that many of its users will be quite impressed.

We are also delighted to announce that Index is leading the $20 million Series A1 in Cockroach Labs. We look forward to supporting this great team for as long as their namesakes will be around—that is, for a very long time.

Posted on 30 Mar 2016
