Plumbing for Data

Twenty years ago, when I was at Cisco in the early days, I learned how sexy plumbing could be in a digital world.

On the surface, what Cisco did was pretty mundane. We used TCP/IP to connect computers in a network: How transformational could it be, really? And how much of this networking stuff did we actually need? I recall inventing countless analogies that would explain to the layperson what Internet routing was all about: electronic mailmen, FedEx packages and so forth. But even after 15 to 20 minutes of explanations, I was often met with blank stares from the innocent victims of my narrative.

As it turned out, all that Internet plumbing became $30 billion per year of revenue and Cisco became a company worth $150 billion. As businesses go, that’s pretty sexy. The lesson here is that the network—whether one made for packets (like Cisco’s) or one made for humans (like Facebook’s)—is hugely strategic. Networks are in the unique position of being able to improve themselves for every extra entity they connect. Additionally, they are enormously hard to replace because doing so would affect every member of that network. These factors contribute to them becoming big and valuable businesses.

Today, the TCP/IP plumbing that we built at Cisco has matured and isn’t growing as quickly anymore, but a whole new revolution is under way, and it is all about data. Businesses around the world have figured out that they have a lot of it, and that it can be really valuable, whether it’s used to improve products, monitor health or find natural resources. So the big business opportunity now is figuring out how to harness all that data.

Getting value out of data requires two major tasks. The first is transforming data into insight so that enterprises big and small, in virtually every industry vertical, can take strategic action based on it. We jumped on that bandwagon and invested in Interana because they offer an amazing service for making sense of large swaths of data.

The second major task is getting the right data into the hands of the right applications and people at the right time. This task sounds trivial, but in reality data plumbing has a number of problems. First, data today is growing exponentially, and most of the plumbing that was built for data, even as recently as five years ago, isn’t designed to handle the massive volume currently streaming across a business. Second, the data in most organizations sits in silos and belongs to the application that created it (sensors, clickstreams, etc.), so it can be hard both to find and to extract. Even when the “owner” of the data wants to publish it, the connective tissue between publisher and consumer is a one-off API, which becomes harder to manage as the number of data sets grows. Finally, consumers of the data, such as marketing, finance and product teams, often need it in a format different from the one in which it is published, so the data has to be transformed before the consumer can actually use it.

This collection of one-off connections between publishers and consumers can quickly get out of hand and result in a data hairball. Any organization that has to manage this level of complexity needs a network. A network for big data.
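To put rough numbers on the hairball: if every publisher is wired directly to every consumer that needs its data, the number of one-off connections grows with the product of the two counts, while a shared network needs only one connection per participant. Here is a minimal sketch, assuming the worst case in which every consumer needs data from every publisher. The function names and the counts are illustrative, not figures from any real deployment.

```python
def point_to_point_links(publishers: int, consumers: int) -> int:
    """One-off connections when every publisher feeds every consumer directly."""
    return publishers * consumers


def hub_links(publishers: int, consumers: int) -> int:
    """Connections when every participant plugs into one shared network."""
    return publishers + consumers


# Compare the two wiring styles as an organization grows (illustrative sizes).
for n in (5, 20, 100):
    print(n, point_to_point_links(n, n), hub_links(n, n))
```

With 20 publishers and 20 consumers, that is 400 one-off connections versus 40 through a hub, and the gap widens as the organization adds more of either.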

Enter Kafka

At the heart of the LinkedIn data infrastructure group, Jay Kreps, Neha Narkhede and Jun Rao were seeing this data plumbing problem become more and more untenable by the day. As a professional social network, LinkedIn had massive amounts of data about people’s personal and work connections, and was supporting multiple apps and a social graph system, generating millions of personalized feeds, handling over a billion searches a day, and performing countless other tasks. Additionally, each of the company’s data publishers and subscribers at the time was stitched together with different protocols, APIs, connectors and snippets of code. Needless to say, the job of quickly and accurately publishing all of LinkedIn’s massive streams of data to the right applications was enormously difficult.

To deal with the complexity, inaccuracies and redundant work, the three decided to architect a different system that could simplify the interconnection of enterprise data and be the single “network” between publishers and consumers. First, the trio knew their system needed to be hugely scalable and able to serve as the central data backbone for a large corporation. The system also needed to accommodate the massive volumes of real-time data that were being produced on one end and ingested by disparate subscribers on the other end, and have a buffer memory to accommodate asynchronous publishers and subscribers.
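The buffering idea is easier to see in miniature. The sketch below is a toy, in-memory illustration of a shared log sitting between publishers and subscribers. It is not Kafka's API or implementation: the class `TinyLog`, its methods and the topic and subscriber names are all hypothetical, and it ignores persistence, partitioning and scale entirely. It only shows how an append-only buffer plus a per-subscriber read position lets each side work at its own pace.

```python
from collections import defaultdict


class TinyLog:
    """Toy stand-in for a shared data backbone (hypothetical, not Kafka's API)."""

    def __init__(self):
        self._topics = defaultdict(list)   # topic -> append-only list of messages
        self._offsets = defaultdict(int)   # (topic, subscriber) -> next index to read

    def publish(self, topic, message):
        # Publishers only append; they never need to know who will read.
        self._topics[topic].append(message)

    def poll(self, topic, subscriber, max_messages=10):
        # Each subscriber resumes from its own position, whenever it is ready.
        start = self._offsets[(topic, subscriber)]
        batch = self._topics[topic][start:start + max_messages]
        self._offsets[(topic, subscriber)] = start + len(batch)
        return batch


log = TinyLog()
log.publish("clicks", {"user": 1, "page": "/home"})
log.publish("clicks", {"user": 2, "page": "/jobs"})

# The marketing consumer reads early; finance has not started yet.
marketing_first = log.poll("clicks", "marketing")

log.publish("clicks", {"user": 3, "page": "/feed"})

# Marketing sees only the new message; finance catches up on all three.
marketing_second = log.poll("clicks", "marketing")
finance_all = log.poll("clicks", "finance")
print(len(marketing_first), len(marketing_second), len(finance_all))
```

Because the log keeps messages after they are read, a slow or late subscriber loses nothing, and adding a new subscriber requires no change on the publishing side.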

Jay, Neha and Jun named their product Kafka. The open-source project quickly took hold in various parts of the LinkedIn environment, and before long it became the heart of the company’s data infrastructure. Because it was open source, it was also slowly but surely discovered (and adopted) by other developers facing the same plight at their own companies.

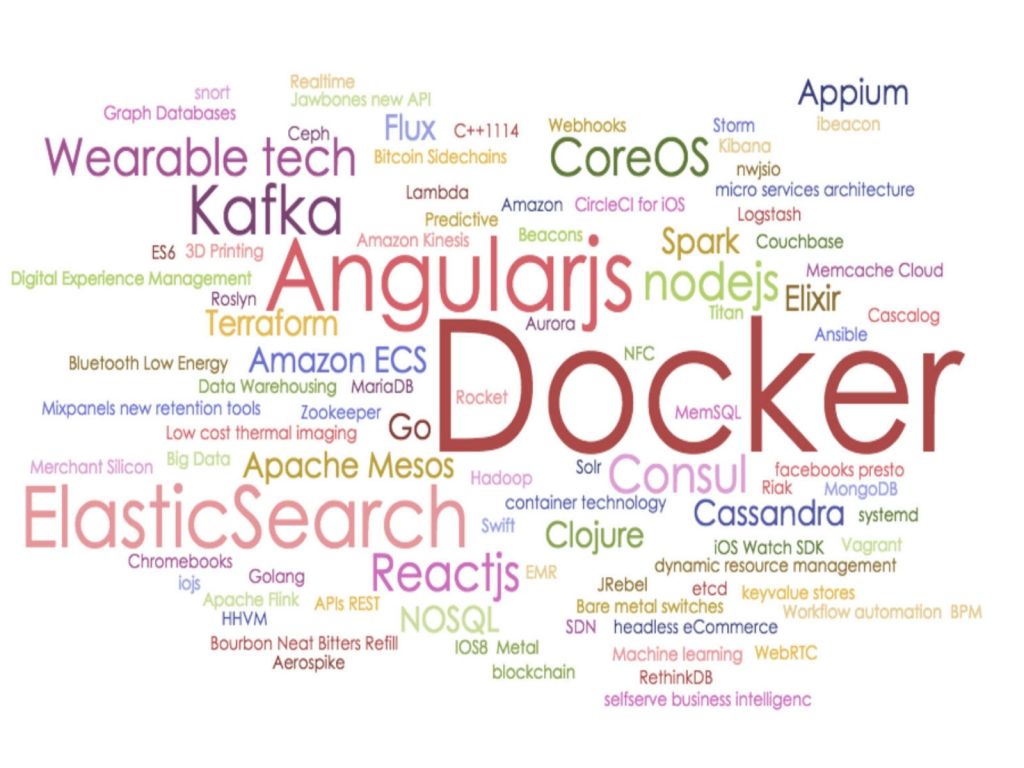

The wonderful thing about good open-source projects is that, like consumer applications, they spread virally. At Index, we regularly survey our portfolio companies about the infrastructure they use. We ask CTOs and CIOs what their primary tools are and which new technologies are trending upwards. The tag cloud shown here visualizes the survey responses from late 2014. Kafka had become one of the top “hot technologies”.

About a year ago, Jay, Neha and Jun decided that the opportunity for what they had created within LinkedIn was so significant that it was time for them to build a company around it. They worked with LinkedIn (which is an investor in the company) to spin out their work and launch Confluent, Inc.

Because Kafka was born in the modern big data environment, it is designed to simplify the management of massive real-time data streams. The product is a central hub that acts as an organization’s nervous system, supporting hundreds of applications built by disparate teams. It is scalable and reliable enough to handle critical updates, such as replicating database changelogs, and it features a stream processing framework that integrates easily and makes data available for real-time processing. Kafka can even do lightweight data transformations to patch between publishers and consumers of data. In fact, Kafka has built up quite the list of users in a very short period of time.

Today, we are delighted to announce that Index is leading the $24M Series B in Confluent.

For the longest time, I thought that the Mario Brothers would be the sexiest plumbers of all time, but I think I’ve changed my mind: Jay, Neha and Jun are giving Mario and Luigi a serious run for their money.

(Image courtesy of http://mario.wikia.com/)

Posted on 08 Jul 2015
